Challenges of Accelerating ML Inference on an Edge Device

Recommended techniques for deploying AI at the edge

In 1965, Intel co-founder Gordon Moore published a paper predicting that the number of transistors on an integrated circuit would double every year. Ten years later, he revised his prediction to a doubling every two years. This is what we know today as Moore's Law. Grounded in an observed trend, it has served as a guiding principle for the semiconductor industry for nearly 60 years.

The law has an inevitable limit. You can keep making something smaller and smaller, but only up to a certain point. Beyond that, other kinds of innovation are required to make more powerful and efficient processors.

Over the years, the demand for more compute resources has been undeniable. The explosion of the internet in the 1990s triggered a surge in the volume of digital data. Major companies like Google realized that they could analyze this data using big data techniques and uncover insights into user behavior and digital patterns. They have been using this information to optimize their search engines and drive data-driven decision-making.

Machine learning algorithms applied to such vast amounts of data require far more computing power than a general-purpose CPU can supply. This posed a problem for companies doing machine learning and big data analysis in the early stages.

In the 1990s, engineers created a specialized compute unit called the GPU (graphics processing unit) to improve video game graphics. Today, GPU cloud servers power many parallel workloads, including computer clusters, artificial intelligence systems, and deep learning. The GPU introduced a new paradigm in parallel computing architecture: it can execute two or more parts of a program simultaneously. This approach combines multiple smaller computers with large memory banks and high-speed processors to form a single, high-performance supercomputer.

GPUs are good at doing lots of simple computations in parallel. This fits perfectly with the workload required for training neural networks such as Convolutional Neural Networks (CNNs).

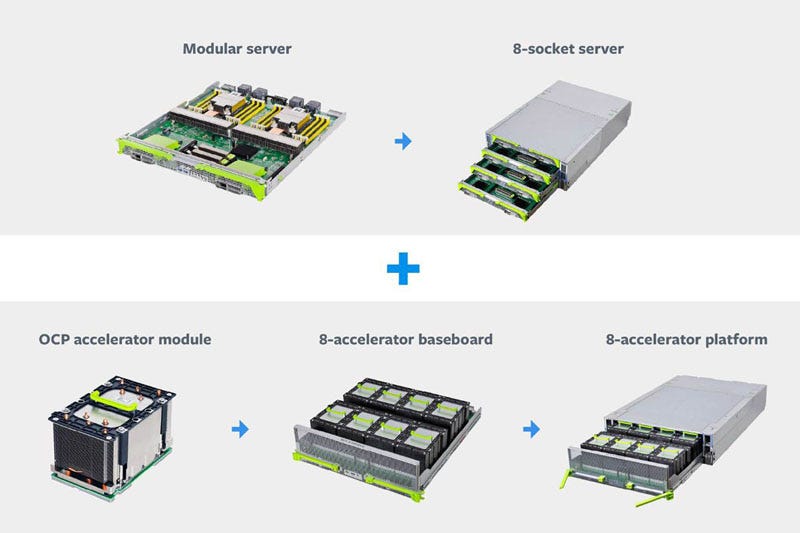

Today, there are many GPU vendors. Moreover, companies have created their own custom hardware to optimize AI inference. One example is Facebook's hardware platform. The Facebook Zion comprises eight CPUs and eight accelerators. The design is disaggregated insofar as the server CPUs are in modular sleds and the accelerators sit in another modular system. The Zion system components are shown in the picture below.

This works well in cloud environments, where you can choose the architecture, capacity, and model of CPUs and GPUs, achieving a homogeneous stack for machine learning training and inference pipelines. However, moving ML inference and training to the edge introduces several new challenges.

In the following sections, I will discuss these challenges in detail and provide practical recommendations for effective AI deployment at the edge.

AI at the Edge

The main idea of running machine learning at the edge is to reduce the latency of the output. Moreover, there are cases where devices deployed in the field do not have a reliable internet connection, and inference must happen on the device without depending on the internet. One example of AI at the edge is the iPhone feature that unlocks the phone using your face as a unique identity key.

You don't want users to end up locked out of their phones because there is no internet connection. Moreover, capturing the image (your face), converting it into inputs for the AI model, and running the inference must all happen really fast.

Engineers have worked to overcome the challenges of enabling AI at the edge. For example, the iPhone's face unlock feature benefits from Apple's AI ecosystem. Since 2017, Apple has included its own mobile GPU, simplifying development for engineers by providing a homogeneous architecture when deploying apps to iPhones.

The Android ecosystem presents a different scenario. There are over 25 mobile chipset vendors, each of which mixes and matches its own custom-designed components. With multiple chip vendors producing CPUs and GPUs for Android devices, the ecosystem is highly fragmented. Engineers aiming to deploy AI at the edge face significant challenges in targeting the various architectures.

Vulkan, a low-overhead, cross-platform API for high-performance 3D graphics and a successor to OpenGL and OpenGL ES, aims to provide an abstraction layer that gives developers control of the stack. Today, adoption of Vulkan is still limited but has been growing over the years.

This is the challenge of vendor diversity. One approach is to develop ML models capable of running inference effectively using only CPUs. This eliminates the need to ensure the model performs well across multiple GPU architectures. However, relying solely on CPUs introduces its own challenges, requiring significant software-level optimizations to make the model more efficient.
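
As one illustration of such a software-level optimization, the sketch below shows weight quantization, which also appears in the list of optimizations later in this article. It is a minimal sketch, assuming symmetric per-tensor int8 quantization. The function names `quantize_int8` and `dequantize` and the sample weights are hypothetical and not taken from any framework; real toolchains typically add calibration data and per-channel scales.

```python
def quantize_int8(weights):
    """Map float weights to int8-range integers using one shared scale.

    Assumption: symmetric per-tensor quantization; names are illustrative.
    """
    max_abs = max(abs(w) for w in weights)
    # The largest magnitude maps to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.82, -1.27, 0.003, 0.5]  # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_error = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print("scale:", scale)
    print("largest rounding error:", max_error)
```

Storing each weight as an 8-bit integer plus one shared scale takes roughly a quarter of the memory of 32-bit floats, at the cost of a rounding error of at most half the scale per weight.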

Techniques for AI at the Edge

Engineers also face implicit challenges due to the constraints of IoT devices. These devices have limited CPU, GPU, and memory resources, which makes certain techniques necessary to improve AI performance at the edge. The following techniques and considerations are recommended:

Data parallelism: It is common to split the data and send the pieces in parallel to multiple CPUs/GPUs to make inference faster.

Model parallelism: Some ML frameworks allow you to split the model so that each CPU/GPU core processes its own part. This approach is more complex, and not all frameworks and hardware vendors support it.

Heavy initialization of ML/DL models: Perform initialization once per process/thread and reuse the initialized model for every inference call.

Under-utilization: It is common to end up in a situation where the tasks handled by the CPU take 100% of its capacity while the GPU remains underutilized.

Out-of-order outputs: When the outputs of a parallelized system must keep a specific order, an extra step such as indexing and reordering is required.

Multiprocessing: Several languages used for machine learning let you use multiprocessing techniques to take advantage of parallelism with GPUs. It is worth mentioning that not all deep learning frameworks support multiprocessing.

Training at the edge: In some cases, the IoT device may need to learn from data, requiring both ML training and inference to occur on the constrained device. One common approach is to start from a pretrained model, freeze most of its weights, and fine-tune only the remaining ones, which is less computationally demanding.

Optimizations: Model architecture search, weight compression, quantization, algorithmic complexity reduction, increasing the training data, refining feature sets, and micro-architecture-specific performance tuning.
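
Three of the items above (data parallelism, one-time heavy initialization, and out-of-order outputs) can be combined in one small sketch. It assumes a thread pool from Python's standard library and uses a hypothetical `load_model` that stands in for an expensive model load; the weights and function names are illustrative only. Threads help mainly when the inference runtime releases the interpreter lock in native code. `ProcessPoolExecutor` accepts the same `initializer` argument when separate processes are needed.

```python
import threading
from concurrent.futures import ThreadPoolExecutor, as_completed

_local = threading.local()  # holds one model instance per worker thread


def load_model():
    """Hypothetical stand-in for an expensive model load."""
    weights = [0.5, -1.0, 2.0]
    return lambda features: sum(w * x for w, x in zip(weights, features))


def init_worker():
    # Heavy initialization: runs once per worker thread, not once per sample.
    _local.model = load_model()


def infer(index, features):
    # Return the input index with the prediction so results can be reordered.
    return index, _local.model(features)


def run_batch(samples, workers=4):
    results = [None] * len(samples)
    with ThreadPoolExecutor(max_workers=workers, initializer=init_worker) as pool:
        # Data parallelism: each sample is submitted as an independent task.
        futures = [pool.submit(infer, i, s) for i, s in enumerate(samples)]
        # as_completed yields futures in completion order, which may differ
        # from submission order, so each result is placed by its index.
        for future in as_completed(futures):
            index, prediction = future.result()
            results[index] = prediction
    return results


if __name__ == "__main__":
    batch = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [2.0, 2.0, 2.0]]
    print(run_batch(batch))
```

Carrying the index alongside each prediction keeps the reordering step cheap: results are written straight into their final slot instead of being sorted afterwards.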

The growing importance of deep learning-based applications presents both exciting opportunities and complex design challenges at the edge. TinyML, for instance, is a new approach that simplifies the integration of AI at the edge, enabling applications where sending data to the cloud is impractical. Fortunately, hardware has advanced to the point where real-time analytics are now feasible. For example, the Arm Cortex-M4 processor can perform more FFTs (Fast Fourier Transforms) per second than the Pentium 4, while consuming significantly less power. Similar improvements in power and performance have been made in sensors and wireless communication. TinyML allows us to leverage these hardware advances to create innovative applications that were previously impossible.

To learn more about TinyML and AI at the edge, I recommend the following resources: